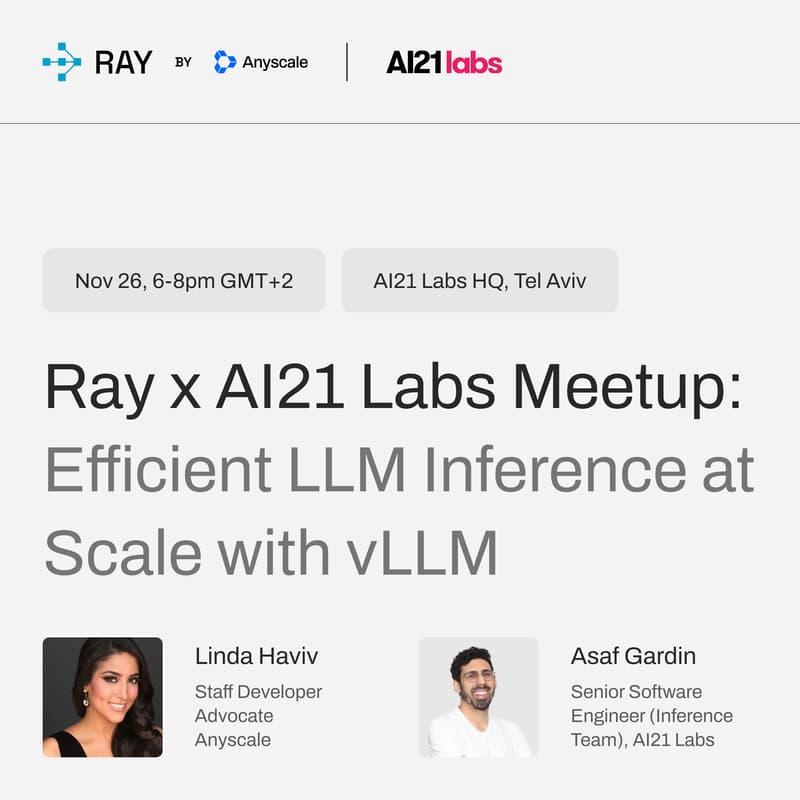

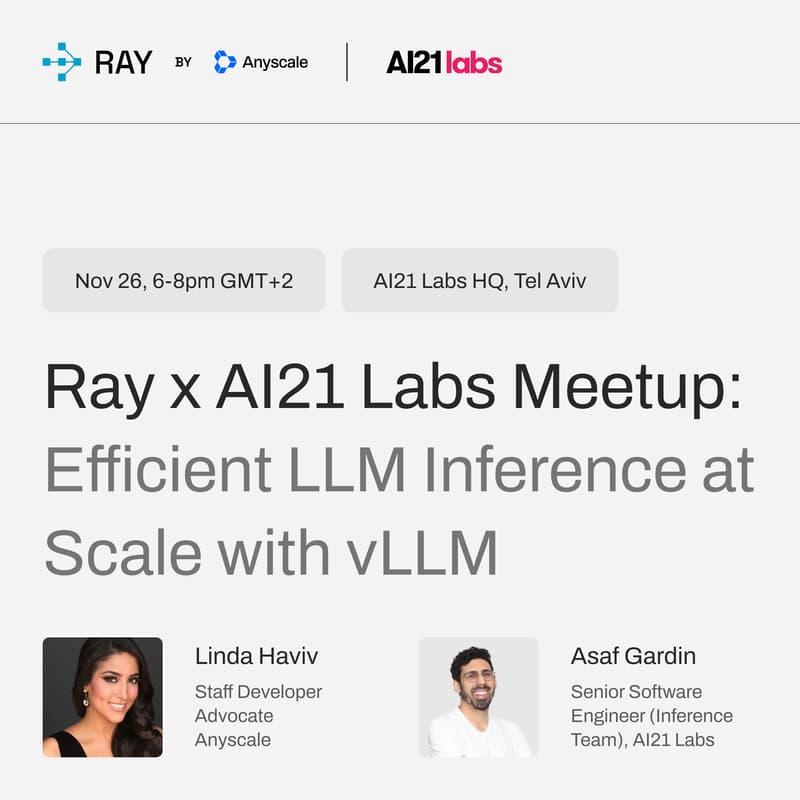

Ray x AI21 Labs Meetup: Efficient LLM Inference at Scale with vLLM

Large language models are transforming the way we build AI applications, but serving and scaling them efficiently is still one of the hardest problems in MLOps. Join us for an evening with Ray and AI21 Labs to explore how open-source tools like Ray and vLLM make large-scale inference faster, more cost-effective, and easier to manage in production.

You'll hear from experts at Anyscale and AI21 Labs as they dive into real-world challenges and solutions for running LLM workloads, from batch inference pipelines to dynamic, high-throughput serving.

6:00 PM – 6:30 PM: Welcome & Networking

6:30 PM – 7:30 PM: Talks & Demos

Efficient LLM Serving with vLLM (Asaf Gardin, Senior Software Engineer, Inference Team, AI21 Labs)

Discover how vLLM delivers efficient, dynamic inference through features such as PagedAttention, continuous batching, and KV cache management. This talk breaks down how vLLM achieves high-throughput, cost-effective serving for a range of models, including hybrid architectures such as AI21's Jamba.

Scaling LLM Batch Inference with vLLM + Ray (Linda Haviv & Ali Sezer, Anyscale)

Learn how Ray orchestrates CPU and GPU workloads to run batch inference efficiently at scale, keeping GPUs fully utilized even when processing multimodal data. We'll walk through practical examples built with Ray Data, including embedding and evaluation workloads for text, video, and audio.

Q&A + Networking

Whether you're building your first LLM service or optimizing large-scale deployments, this is a great chance to learn from practitioners solving the hardest scaling challenges in AI today, and to connect with the local Ray and MLOps community.

About Anyscale

Anyscale, the company behind the open-source Ray project, offers a fully managed, enterprise-ready unified AI platform. With Anyscale, companies can build, deploy, and manage all their AI use cases, bringing transformational AI products to market faster.

Try Anyscale for free.

Join the Ray Community

Follow Anyscale on LinkedIn and Twitter/X.

We're hiring! Check out our job openings.